UX/UI Testing – Getting successful test results on a live site.

When testing user flows, even in a simple funnel, things can get complicated and skew your results. There are steps you can take to keep those results accurate.

Clearly understand and define what you’re testing.

Macro and micro-interactions all have an effect – even if they aren’t part of the interaction or path you’re testing. Although conversion is the ultimate goal, how many interactions or processes lie between your test situation and conversion or purchase? Narrow the expected outcome as much as possible to eliminate noise from other interactions. Understand all the interactions within the test path and record a baseline for each of them in your metrics before the test starts. That baseline will be handy when you define what you can measure and when you review test results. You may be unable to draw a straight line between a successful test and a purchase. Business stakeholders tend to like straight-line connections, so this is where you’ll test your ability to present UX/UI value and impact.
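One way to get that baseline is to measure the conversion rate between each pair of adjacent steps in the test path, not just the final purchase. The snippet below is a minimal sketch in Python, assuming you can export each session as an ordered list of step names. The step names in `FUNNEL` and the function `step_conversion` are made up for illustration and are not part of any analytics tool.

```python
from collections import Counter

# Hypothetical funnel steps, in order. Replace with the steps in your own test path.
FUNNEL = ["product_page", "cart", "checkout", "purchase"]


def step_conversion(sessions, funnel=FUNNEL):
    """Return a list of (from_step, to_step, rate) for adjacent funnel steps.

    `sessions` is an iterable of lists of step names, one list per session.
    A session counts as reaching a step only if it also reached every
    earlier step in the funnel.
    """
    reached = Counter()
    for events in sessions:
        seen = set(events)
        for step in funnel:
            if step not in seen:
                break  # stop at the first funnel step this session never hit
            reached[step] += 1

    rates = []
    for from_step, to_step in zip(funnel, funnel[1:]):
        started = reached[from_step]
        rate = reached[to_step] / started if started else 0.0
        rates.append((from_step, to_step, rate))
    return rates
```

Run it on data from before the release, keep the numbers, and run it again during the test. A change at one step with flat numbers everywhere else is much easier to explain to stakeholders than a single overall conversion figure.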

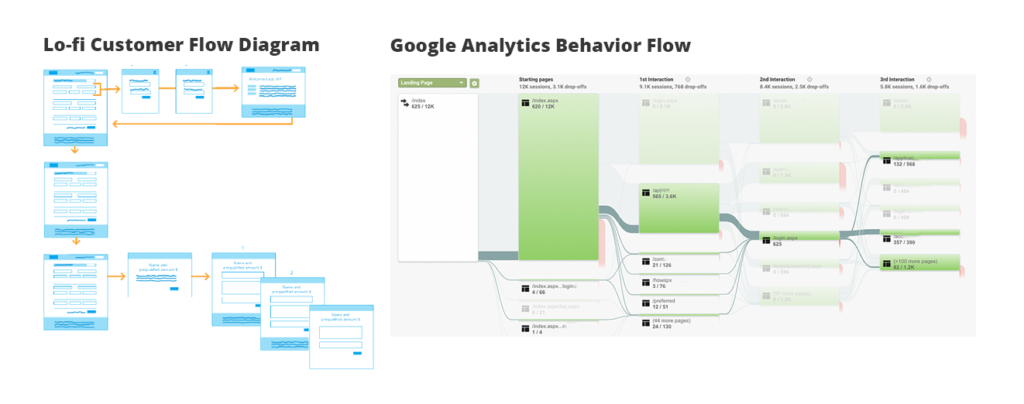

A tip for working with behavior flows: Map out your user flows, then verify with a Google Analytics (G.A.) behavior flow map.

It’s much easier to understand and verify the expected flow on a lo-fi flow diagram or site map, then check the G.A. behavior flow to confirm that it matches. This is extremely helpful when examining flows where people don’t do what’s expected. Your diagram will give you perspective on the alternate paths when users take them.
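If you can export page paths from your analytics tool, the same comparison can be roughed out in a few lines of Python. This is only a sketch: the `EXPECTED` flow, the page names, and the `off_path_summary` function are assumptions for illustration, and it assumes each session has already been exported as an ordered sequence of page names.

```python
from collections import Counter

# Hypothetical expected flow taken from your lo-fi diagram.
EXPECTED = ("home", "product_page", "cart", "checkout")


def off_path_summary(observed_paths, expected=EXPECTED):
    """Count where sessions first leave the expected flow and where they go.

    Returns a Counter keyed by (last_expected_page, page_visited_instead).
    Sessions that simply stop early without visiting an unexpected page
    are drop-offs, not alternate paths, and are not counted here.
    """
    divergences = Counter()
    for path in observed_paths:
        for i, page in enumerate(path):
            if i >= len(expected):
                break  # session continued past the end of the expected flow
            if page != expected[i]:
                previous = expected[i - 1] if i > 0 else "(entry)"
                divergences[(previous, page)] += 1
                break  # only record the first divergence per session
    return divergences
```

Calling `off_path_summary(paths).most_common(5)` gives the five most frequent detours, which you can then mark on your diagram as the alternate paths worth a closer look.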

“In proving foresight may be vain:

The best-laid schemes o’ Mice an’ Men

Gang aft agley,”

– Robert Burns

What if things don’t go as planned?

Consider this scenario: You’ve just launched a new user flow for a client. It could be a shopping cart edit, a signup process update, or an entirely new feature. You release this new beauty into the world, and there’s no improvement or, worse, a drop in conversion. What do you do? Roll back?

Don’t panic.

Before you make a decision, answer a couple of simple questions:

• Is the issue blocking customers from completing the expected task?

• Is the experience damaging the client’s online reputation?

There are three actions you can take in response to poor initial results:

1. You could roll back the test. Hopefully, you built this failsafe into your test/release plan. However, this is the nuclear option: a quick rollback lets you resume normal business function, but you learn little or nothing from an aborted test. Save the rollback for a “yes” to either of the two questions above.

2. Review the path using first-person review, recordings, user interviews, Google Analytics, or any other available tool to see whether there’s an unintended collateral issue. Even with a well-reviewed release, new code can conflict with existing code in ways that affect the user but aren’t directly related to the test. If it’s something that can be fixed, fix it and continue testing. Remember to adjust the test’s date range to exclude the affected period, or at least note it in your results.

3. If your review turns up no collateral issue of the kind described in action #2, accept that we run tests to see how people will respond. Keep tracking closely! Sometimes a new interaction that replaces a familiar one causes hesitation. It may take time for customers to build comfort and familiarity.
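For action #2, excluding the affected period can be as simple as filtering sessions by start time before you calculate conversion. Here is a minimal Python sketch, assuming each session is a dict with a `started_at` datetime and a `converted` flag. Those field names and the `conversion_rate` function are hypothetical, so adapt them to whatever your export looks like.

```python
from datetime import datetime


def conversion_rate(sessions, exclude_start=None, exclude_end=None):
    """Return (rate, session_count), skipping sessions in the excluded window.

    The window is half-open: sessions starting at or after `exclude_start`
    and before `exclude_end` are dropped. If either bound is None, nothing
    is excluded. Returns (None, 0) when no sessions remain.
    """
    has_window = exclude_start is not None and exclude_end is not None
    kept = [
        s for s in sessions
        if not (has_window and exclude_start <= s["started_at"] < exclude_end)
    ]
    if not kept:
        return None, 0
    converted = sum(1 for s in kept if s["converted"])
    return converted / len(kept), len(kept)
```

Report both numbers, with and without the excluded window, along with the dates you removed. That keeps the record honest and shows how much the collateral issue moved the result.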

Testing is the lifeblood of XD. We can research and make educated guesses about what customers will do – but people will surprise you. The key is learning something – whether good or bad. The only failed test is one with no new insights.